What Engineers and Policymakers Need to Know about the ChatGPT Revolution

The Institution of Engineering and Technology (IET)

Is the engineering profession on the brink of a major disruption? With the rise of ChatGPT and other AI-powered technologies, engineers are set to benefit from unprecedented levels of productivity. AI-literacy and transparency are critical to getting it right.

ChatGPT is neither the only nor the first artificial intelligence (AI) system that can mimic human text production. It belongs to a class of large language models (LLMs), which generate text by calculating the probability that one word follows another in a given context. Google, Meta, and Microsoft have each released their own LLMs, and the further development of these technologies is moving more rapidly than ever before.
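The idea of "calculating the probability that one word follows another" can be illustrated with a deliberately tiny sketch. This is not how ChatGPT is implemented: real LLMs use neural networks trained on vast text collections, whereas the example below simply counts word pairs. The corpus and the function names `train_bigram_model` and `next_word_probabilities` are invented for this illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count how often each word is followed by each other word."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def next_word_probabilities(follow_counts, word):
    """Convert the follow counts for `word` into probabilities."""
    counts = follow_counts.get(word.lower(), Counter())
    total = sum(counts.values())
    if total == 0:
        # The model has never seen this word, so it has nothing to offer.
        return {}
    return {candidate: n / total for candidate, n in counts.items()}


# Toy corpus, invented for this illustration only.
corpus = "the blade is hot the blade is light the fan is large"
model = train_bigram_model(corpus)
probabilities = next_word_probabilities(model, "the")

# Print the candidate next words, most probable first.
for candidate, p in sorted(probabilities.items(), key=lambda item: -item[1]):
    print(f"{candidate}: {p:.2f}")
```

In this toy corpus, "the" is followed by "blade" twice and by "fan" once, so the sketch rates "blade" as the more probable continuation. The output reflects only the frequency of word patterns in the training text, not whether a statement is true.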

By 2030, most of us will be using ChatGPT-like systems as digital ‘assistants’ in our daily working lives. This will accelerate productivity by reducing the time engineers spend on administrative tasks such as writing e-mails and reports and drafting presentations.

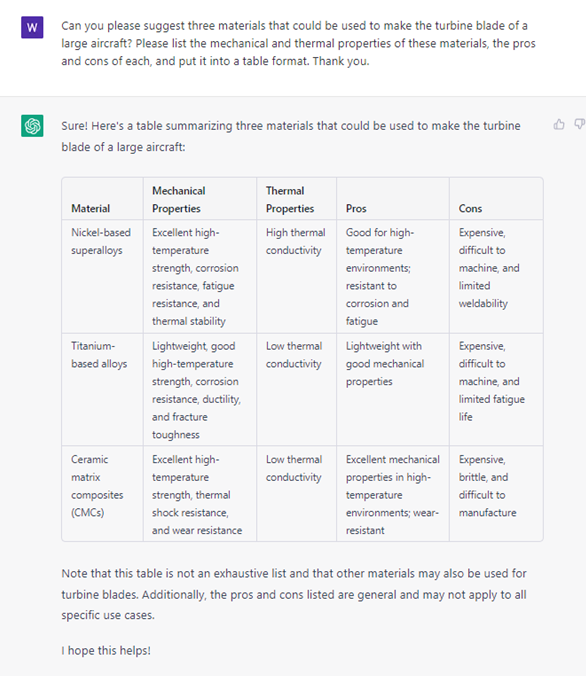

ChatGPT could also be used to provide engineers with technical briefings on nearly any topic, which could help speed up the learning process at the start of new projects. For example, in a matter of seconds, ChatGPT can make suggestions about the materials one might use for the turbine blade of a large aircraft, alongside specified technical information in the desired format (Fig. 1). While this is a rather elementary example, the general construction of the query could be applied to nearly any specified engineering context.

Fig 1. ChatGPT is asked about the materials in a ‘turbine’ blade

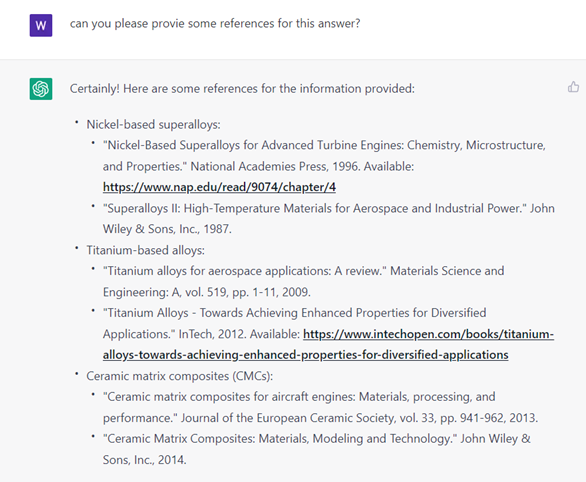

As impressive as this is, it actually illustrates a couple of profound limitations of the present technology. Firstly, the information in Fig. 1 is ‘wrong’ for our stated purpose. The chatbot has confused a ‘turbine’ blade with a ‘fan’ blade and returned inappropriate suggestions for materials. Secondly, when asked to provide references for this information, it generates plausible-sounding sources that are completely fake (Fig. 2). When ChatGPT does not know an answer, it is compelled to offer the wrong one.

Fig 2. ChatGPT is asked to give references for its answer

It is critical that all engineers become AI literate. The above example demonstrates the importance of using chatbots correctly and with caution. More specific questions will elicit more accurate answers. Moreover, ChatGPT – in its current form – should only be used as a starting point for seeking new information. The technical information generated by ChatGPT should always be subjected to a ‘sense check’.

ChatGPT is an impressive technology which is still limited in several ways:

- ChatGPT does not ‘know’ the truth – rather, ChatGPT makes predictions about the choice and order of words based on texts it has read.

- ChatGPT cannot cite sources – for example, in our table of turbine blade materials, there is no genuine source which can be cited for that information.

- ChatGPT cannot draw new conclusions or insights – ChatGPT uses previously seen data to generate responses, and so what we believe are ‘insights’ are in fact references to previously seen information.

- ChatGPT cannot do maths or logic – ChatGPT is not aware of maths rules and can only give ‘probable’ answers which may not be correct.

- ChatGPT may be biased – AI systems are susceptible to amplifying biases encoded in the data they were trained on. Attempts to rectify these biases may lead to overcorrection.

Given these limitations, greater transparency is needed around the operation and use of LLMs. Transparency is essential to maintaining trust that these systems can deliver benefits in the areas in which they excel. Currently, the ChatGPT interface offers only a very limited disclaimer of the software’s limitations. Our recommendation is that LLMs offer comprehensive, visible guidance to ensure that users can leverage these technologies effectively.

In addition, greater transparency is needed around the data used to train LLMs. The use of ChatGPT for enterprise poses legal risks if the intellectual property rights of the data cannot be traced. The UK government should address this lack of clarity as legislation to regulate AI is introduced in the coming years.

Finally, it is worth stressing that ChatGPT in its current form is modest compared to what is coming. Many of the above-described limitations will be overcome. For example, Bing’s LLM is already integrating a ‘citation’ function that can accurately identify the source of answers.

The performance of AI-powered technologies is doubling every 6-12 months. Right now, ChatGPT can do a reasonable, if imperfect, imitation of an engineering student in an exam setting – as long as maths is not involved. The next generation of sector-specific LLMs with added functions will be increasingly difficult to distinguish from the real thing.

Authored by the IET Innovation & Skills panel with contributions from Natalia Konstantinova (independent AI expert).